The Berkeley mixed reality (MR) stroke rehabilitation project is run by Professor Sanjit A. Seshia's research group in partnership with UCSF and Stanford hospitals. The project's goal is to let therapists and patients conduct therapy sessions remotely using MR headsets such as the Meta Quest 3, and to create tailored exercise programs that report patient progress back to therapists. This addresses a critical need: waitlists for in-person therapy can last months, and many patients face geographic barriers to reaching clinics. As a researcher on the project, I lead development and project management across the frontend, backend, and Unity applications.

As project lead, I managed a team of 3-5 developers, including both student researchers and professional developers, coordinating tasks and timelines to ensure milestones were met. I designed and implemented systems across the backend (Go, AWS), frontend (React, TypeScript), and Unity (C#) codebases, integrating Agora video calling, Clerk authentication, AWS App Runner and databases, and multi-provider AI (Gemini, OpenAI, Amazon Bedrock). I also prototyped a novel rehabilitation system that uses multimodal AI to generate and monitor personalized therapy exercises.

Key Features

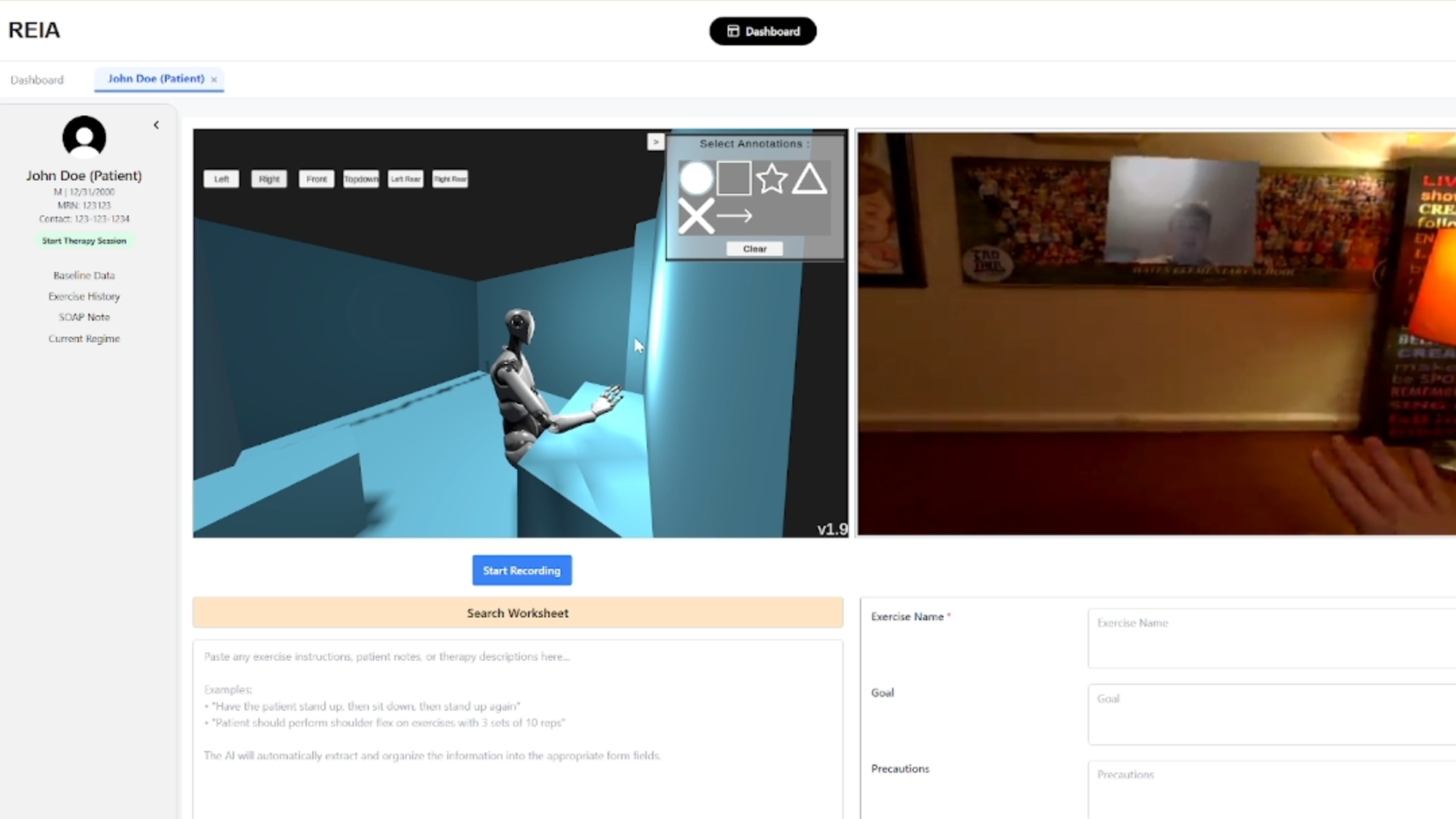

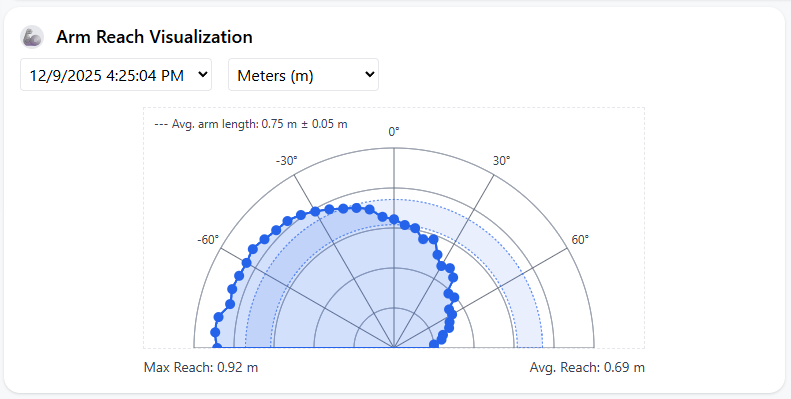

- Remote therapy sessions over video call between therapists on the web portal and patients wearing MR headsets, giving therapists a first-person view, a 3D visualization of the patient's environment, and real-time third-person pose reconstruction.

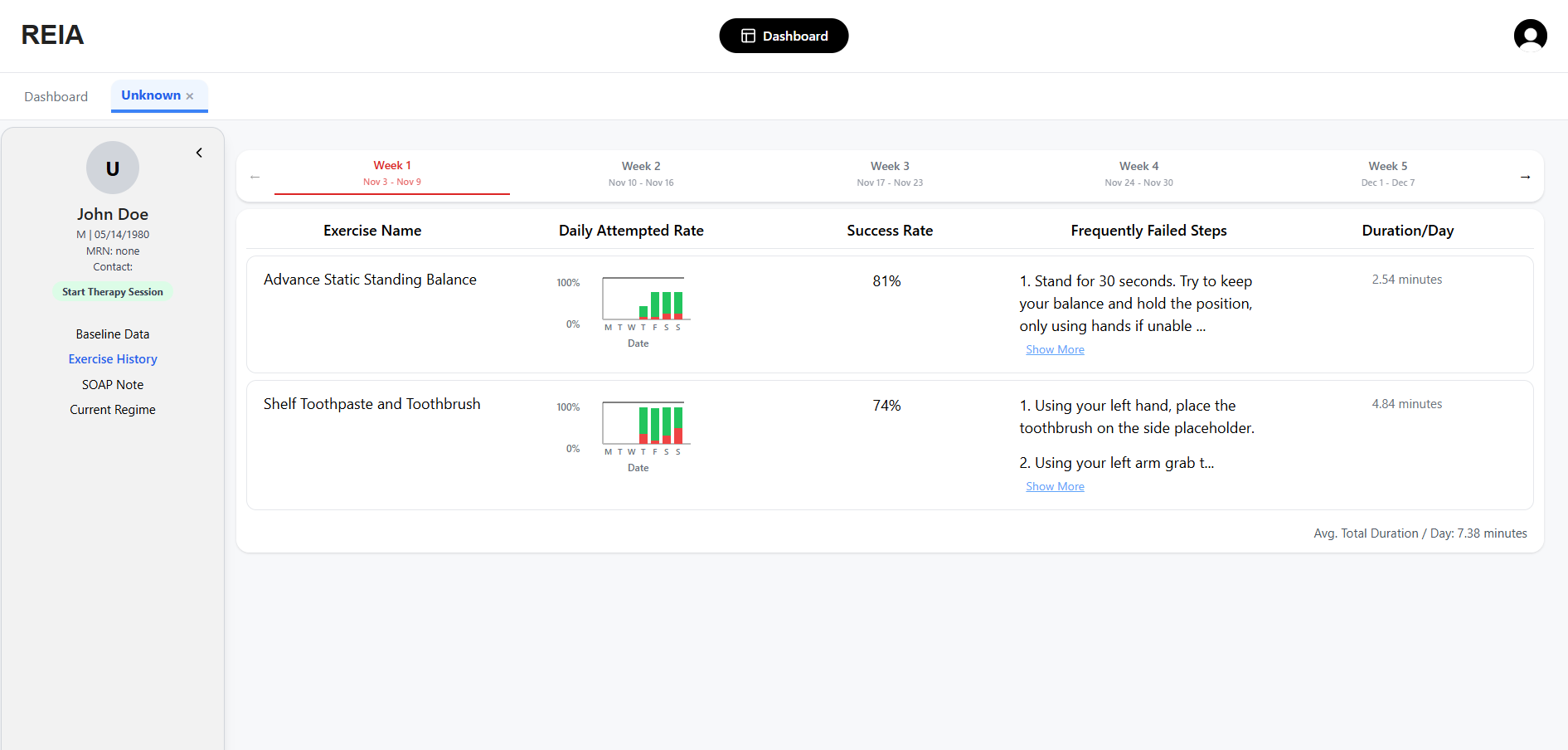

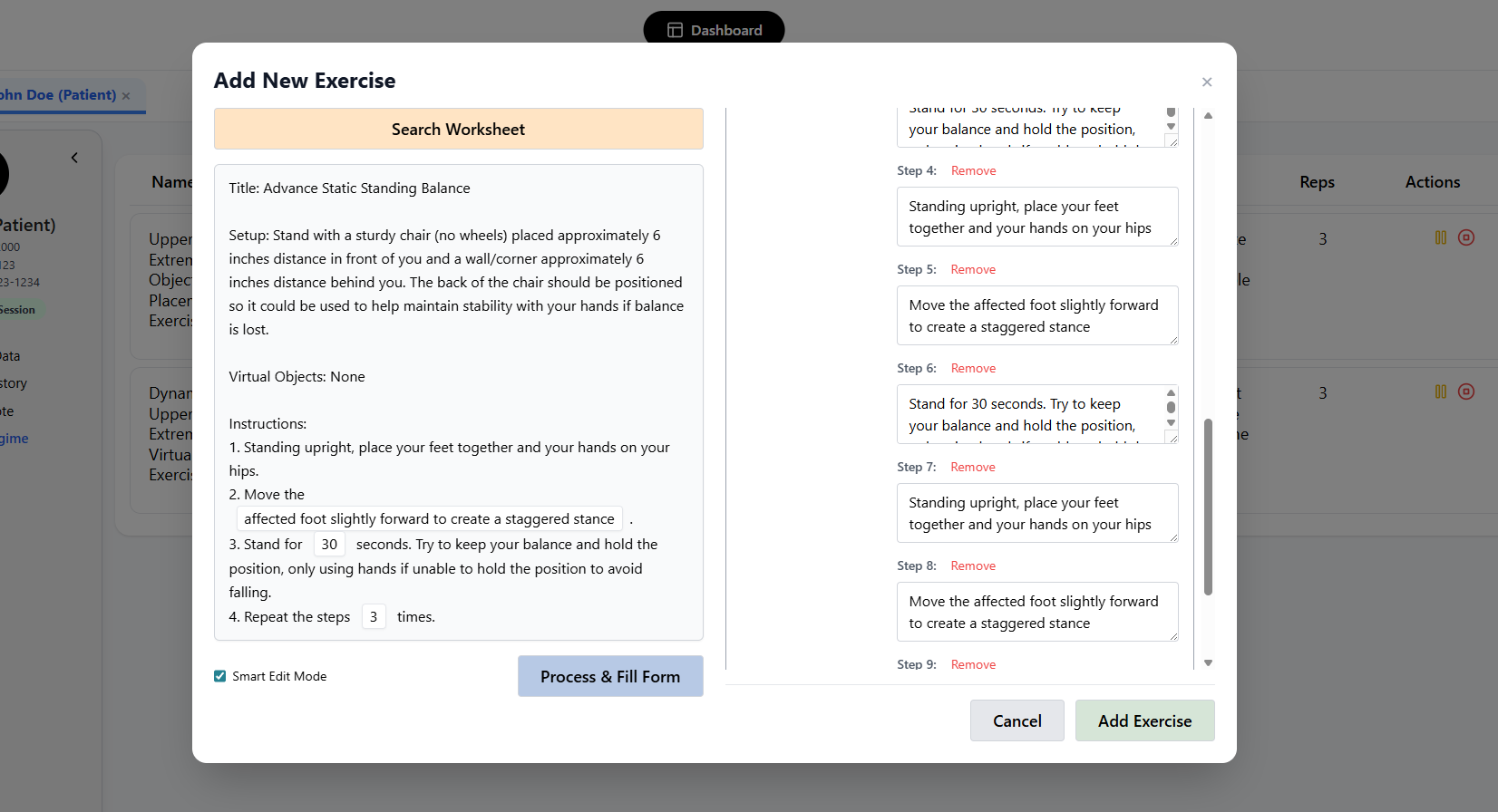

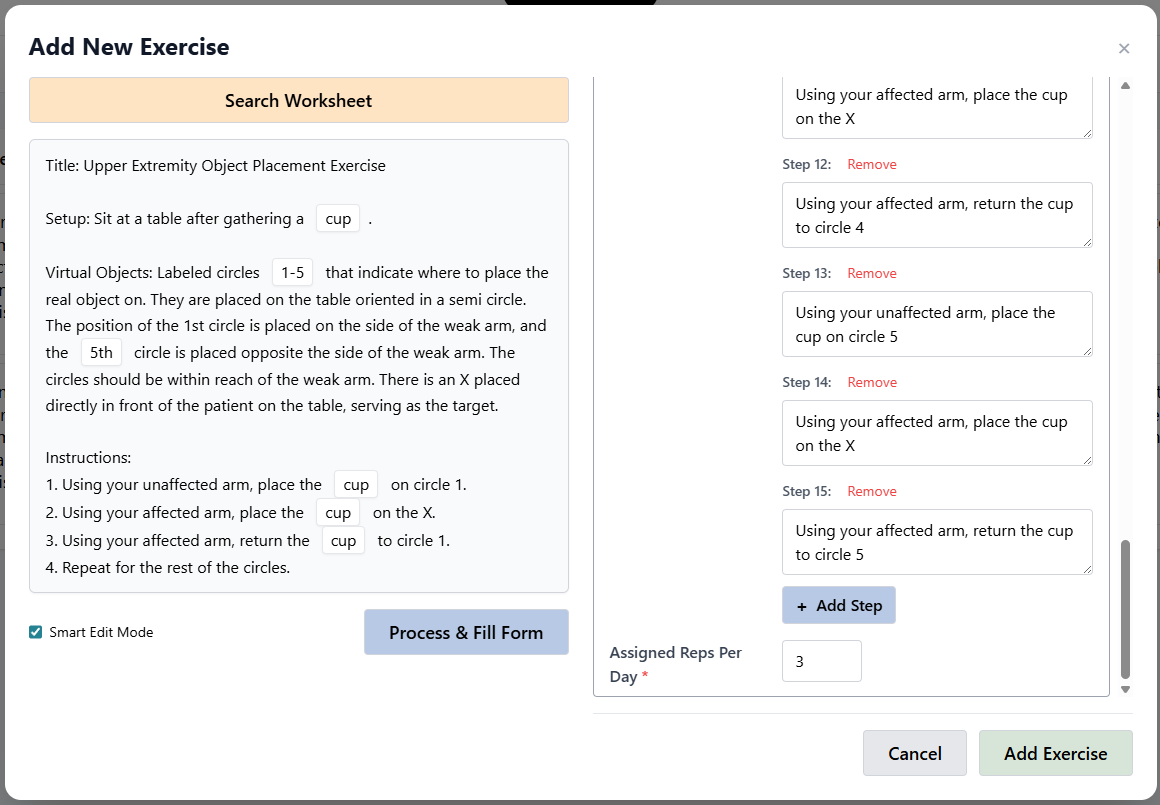

- Exercise programs generated from natural-language worksheets or from transcripts recorded during therapy sessions, tailored to each patient's capabilities, with automatic progress logging for therapist review.

- Multimodal AI system that determines whether each exercise step is complete by combining body pose checks (e.g., standing, raising an arm), vision model queries for functional tasks (e.g., picking up a cup), and utility functions (e.g., touching a virtual circle).

- End-to-end HIPAA-compliant system that protects patient data privacy and security throughout therapy sessions.
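The step-completion idea from the feature list (pose checks, vision queries, and utility functions feeding one completion decision, with progress logged for the therapist) can be sketched as follows. This is a minimal illustration in Go, not the project's actual implementation: the `Frame`, `Check`, `Step`, and `Session` types, the `touchingCircle` helper, and the rule that every check on a step must pass on the same frame are all hypothetical, and the vision-model query is replaced by a precomputed boolean.

```go
package main

import (
	"fmt"
	"math"
)

// CheckKind labels which evaluation mode a check uses (hypothetical taxonomy
// mirroring the pose / vision / utility split described above).
type CheckKind string

const (
	Pose    CheckKind = "pose"
	Vision  CheckKind = "vision"
	Utility CheckKind = "utility"
)

// Frame is a hypothetical per-tick snapshot of tracked patient state.
type Frame struct {
	WristY, ShoulderY float64    // joint heights in meters
	Hand              [3]float64 // hand position in world space
	VisionSaysYes     bool       // stand-in for a vision-model yes/no answer
}

// Check is one condition that contributes to step completion.
type Check struct {
	Name string
	Kind CheckKind
	Pass func(Frame) bool
}

// Step completes only when every one of its checks passes on the same frame.
type Step struct {
	Instruction string
	Checks      []Check
}

func (s Step) Complete(f Frame) bool {
	for _, c := range s.Checks {
		if !c.Pass(f) {
			return false
		}
	}
	return true
}

// touchingCircle is a utility check: true when the hand is within radius of center.
func touchingCircle(center [3]float64, radius float64) func(Frame) bool {
	return func(f Frame) bool {
		dx := f.Hand[0] - center[0]
		dy := f.Hand[1] - center[1]
		dz := f.Hand[2] - center[2]
		return math.Sqrt(dx*dx+dy*dy+dz*dz) <= radius
	}
}

// Session walks a program's steps in order and logs each completion for review.
type Session struct {
	Steps   []Step
	Current int
	Log     []string
}

// Update evaluates the current step against one frame and advances on success.
func (s *Session) Update(f Frame) {
	if s.Done() {
		return
	}
	if step := s.Steps[s.Current]; step.Complete(f) {
		s.Log = append(s.Log, fmt.Sprintf("completed step %d: %s", s.Current+1, step.Instruction))
		s.Current++
	}
}

func (s *Session) Done() bool { return s.Current >= len(s.Steps) }

func main() {
	armRaised := Check{"arm raised", Pose, func(f Frame) bool { return f.WristY > f.ShoulderY }}
	cupLifted := Check{"cup lifted", Vision, func(f Frame) bool { return f.VisionSaysYes }}
	touch := Check{"touch circle", Utility, touchingCircle([3]float64{0, 1.2, 0.5}, 0.1)}

	s := &Session{Steps: []Step{
		{"Raise your arm", []Check{armRaised}},
		{"Pick up the cup", []Check{cupLifted}},
		{"Touch the blue circle", []Check{touch}},
	}}

	frames := []Frame{
		{WristY: 1.0, ShoulderY: 1.4},        // arm down: step 1 still pending
		{WristY: 1.6, ShoulderY: 1.4},        // wrist above shoulder: step 1 done
		{VisionSaysYes: true},                // vision model reports the cup lifted
		{Hand: [3]float64{0.02, 1.21, 0.48}}, // hand 3 cm from circle center
	}
	for _, f := range frames {
		s.Update(f)
	}
	for _, line := range s.Log {
		fmt.Println(line)
	}
	fmt.Println("program complete:", s.Done())
}
```

Running this prints one log line per completed step followed by `program complete: true`. Modeling every check as a plain function lets pose, vision, and utility conditions be mixed freely within a single step. A production system would plausibly also smooth checks over several frames and query the vision model asynchronously, since model latency is much longer than a tracking frame.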